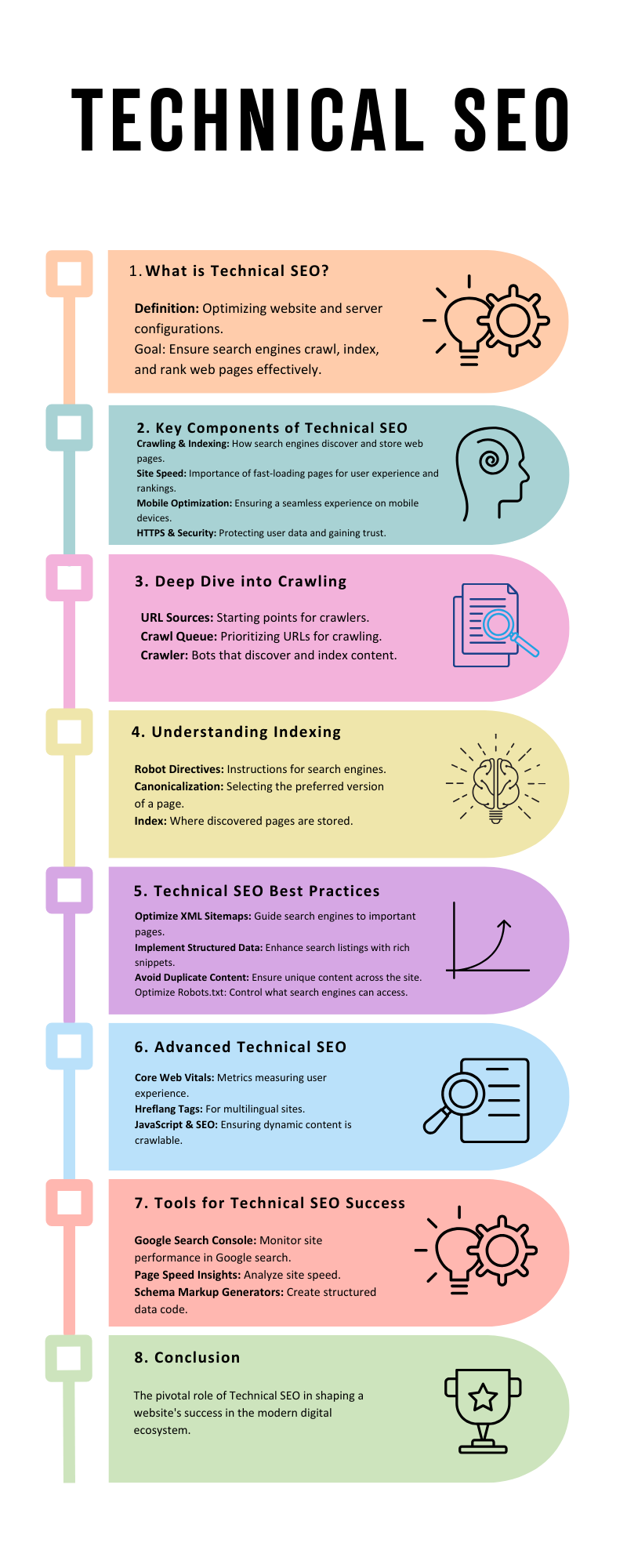

Technical SEO is the part of SEO concerned with configurations that can be implemented on the website and server (e.g., page elements, HTTP header responses, XML sitemaps, redirects, and metadata). For context, search engine optimization (SEO) as a whole is the process of understanding searcher behavior and audiences and optimizing web content to deliver a relevant experience.

Technical SEO covers the essential elements of technical webmastering, including giving search engines the directives, annotations, and signals they need to effectively crawl, index, and therefore rank a web page. The aim is ultimately increased search traffic and revenue.

What is technical SEO?

Technical SEO is the process of optimizing your website to help search engines such as Google find, crawl, understand, and index your pages. The goal is to be found and, as a result, to improve rankings. Technical SEO work has either a direct or an indirect impact on search engine crawling, indexing, and ranking. However, it doesn’t include analytics, keyword research, backlink profile development, or social media strategies.

Is Technical SEO complicated?

It depends. The basics aren’t very difficult to grasp. Advanced technical SEO, however, can be complicated and hard to understand. I will try to keep things simple in this technical SEO guide.

How does crawling work?

Crawlers get hold of pages and make use of the links on those pages to find even more pages. This lets them discover stuff on the web.

Let’s talk about the steps involved in this system:

URL sources: A crawler needs to begin somewhere. Usually, it builds a list of all the URLs it discovers through links on pages. Sitemaps, which are lists of pages created by users or by various systems, are a secondary way to find more URLs.

Crawl queue: All the URLs that need crawling or re-crawling are prioritized and added to the crawl queue. It is essentially an ordered list of URLs that Google wants to crawl.

Crawler: A web crawler, or spider, is a type of bot that is typically operated by search engines like Google or Bing. Its job is to fetch the content of pages across the internet so that they can be indexed and appear in search engine results.

Processing systems: These are various systems that handle canonicalization, send pages to the renderer (which loads them like a browser would), and process the pages to extract more URLs to crawl.

Renderer: The renderer loads a page like a browser would, including its JavaScript and CSS files. Google does this to see what most users will see.

Index: The index is where discovered pages are stored. After a crawler finds a page, the search engine renders it just like a browser would and analyzes its contents. The index stores all of that information.
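The steps above can be sketched as a toy program. This is only an illustration under stated assumptions: the `PAGES` dictionary is a made-up, in-memory stand-in for the web, and the `index` dictionary is a stand-in for a real search index. It shows how a crawl queue and link discovery feed each other, not how any real search engine is built.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
PAGES = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/about"],
    "/blog/post-1": ["/blog"],
}


def crawl(seed_urls, pages):
    """Breadth-first crawl: take a URL from the queue, read it, queue new links."""
    queue = deque(seed_urls)  # crawl queue: URLs waiting to be fetched
    seen = set(seed_urls)     # every URL discovered so far
    index = {}                # stand-in for the search index
    while queue:
        url = queue.popleft()
        links = pages.get(url)
        if links is None:
            continue  # unknown URL; a real crawler would record an error here
        index[url] = links  # "processing": store what was found on the page
        for link in links:
            if link not in seen:  # only newly discovered URLs join the queue
                seen.add(link)
                queue.append(link)
    return index


crawled = crawl(["/"], PAGES)
print(list(crawled))
```

Starting from a single seed URL, the crawler reaches every page that is linked from somewhere, which is why pages without internal links are hard to discover.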

Crawl controls

Robots.txt: A robots.txt file tells search engine crawlers which URLs the crawlers can access on your site. This is used mainly to avoid overloading your site with requests. However, it is not a mechanism to keep a web page out of Google.

Crawl rate: Robots.txt supports a crawl-delay directive that many crawlers respect and that lets you set how frequently they can crawl pages. Unfortunately, Google doesn’t honor it. For Google, you have to change the crawl rate in Google Search Console.
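To see how a well-behaved crawler reads these two robots.txt controls, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the `ExampleBot` user agent, and the example.com URLs are assumptions made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the rules without a network request

# Ask whether a (made-up) crawler may fetch two different URLs.
can_fetch_blog = parser.can_fetch("ExampleBot", "https://example.com/blog/")
can_fetch_admin = parser.can_fetch("ExampleBot", "https://example.com/admin/users")

# Read the crawl-delay value that applies to this crawler, in seconds.
delay = parser.crawl_delay("ExampleBot")

print(can_fetch_blog, can_fetch_admin, delay)
```

Note that these rules are requests, not enforcement: a crawler has to choose to read and follow them.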

Access restrictions: If you want a page to be accessible to some users but not to search engines, you probably want one of these three options:

- A login system

- HTTP authentication (access requires a password)

- IP whitelisting (only specific IP addresses can access the pages)

This type of setup is best for things like internal networks, members-only content, or staging, test, and development sites. It allows a group of users to access the pages, but search engines won’t be able to access them and therefore won’t index them.

How to see crawl activity?

For Google specifically, the Crawl Stats report in Google Search Console is the easiest way to see what is being crawled and how. If you want to view all crawl activity on your website, you need to access the server logs and probably use a tool to analyze the data. This can get fairly advanced, but if your hosting has a control panel like cPanel, you should have access to raw logs and to aggregators like AWStats and Webalizer.
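If you do have raw logs, a small script is often enough for a first look. The sketch below assumes the common "combined" access-log format and uses three made-up log lines; the regular expression is a simplification written for this example, so adjust it to your server’s actual format.

```python
import re
from collections import Counter

# Hypothetical access-log lines in the "combined" log format.
LOG_LINES = [
    '66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2024:10:00:05 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Jan/2024:10:01:00 +0000] "GET /old-page HTTP/1.1" 404 320 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

# Simplified pattern: request path, status code, and the final quoted field (user agent).
LINE_RE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count (path, status) pairs for requests whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        # The user agent string can be spoofed; this is a rough first filter only.
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits


hits = googlebot_hits(LOG_LINES)
for (path, status), count in sorted(hits.items()):
    print(path, status, count)
```

Grouping by status code like this makes it easy to spot crawl requests that end in 404s or server errors.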

Crawl adjustments

Each website has a different crawl budget, which is a combination of how often Google wants to crawl a site and how much crawling the site allows. More popular pages and pages that change frequently will be crawled more often, and pages that don’t seem to be popular or well-linked will be crawled less often.

If crawlers see signs of stress while crawling your website, they’ll typically slow down or even stop crawling until conditions improve. After pages are crawled, they’re rendered and sent to the index. The index is the master list of pages that can be returned for search queries.

Understanding indexing

Let’s talk about how to make sure your pages get indexed and how to check the way they are indexed.

Robots directives: A robots meta tag is an HTML snippet that tells search engines how to crawl or index a certain page. It’s placed in the <head> section of a web page and looks something like this:

<meta name="robots" content="noindex" />
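To check a page for this tag programmatically, something like the following sketch could work. It uses only Python’s standard `html.parser` module; the class and function names are made up for this example, and it looks only at the generic `robots` meta tag, not at crawler-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


blocked = '<html><head><meta name="robots" content="noindex, follow" /></head></html>'
allowed = '<html><head><meta name="description" content="A page"></head></html>'
print(is_noindex(blocked), is_noindex(allowed))
```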

Canonicalization: When there are multiple versions of the same page, Google will select one to store in their index. This process is called canonicalization and the URL selected as the canonical will be the one Google shows in search results. There are many different signals they use to select the canonical URL including:

- Canonical tags

- Duplicate pages

- Internal links

- Redirects

- Sitemap URLs

The easiest way to see how Google has indexed a page is to use the URL Inspection Tool in Google Search Console. It will show you the Google-selected canonical URL.
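The canonical tag is the one signal in that list you can read straight from a page’s HTML. The sketch below extracts it with Python’s standard `html.parser`; the names and the example.com URL are assumptions for illustration. Keep in mind that it reports only the canonical you declared, which may differ from the one Google selects.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def declared_canonical(html):
    """Return the declared canonical URL, or None if the page has no canonical tag."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


page = '<head><link rel="canonical" href="https://example.com/shoes/" /></head>'
print(declared_canonical(page))
```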

Quick wins for technical SEO strategy

Prioritization is one of the hardest things for SEOs. There are many best practices, but some changes will have more of an impact on your rankings and traffic than others. They also help in differentiating between white-hat, black-hat, and grey-hat SEO.

I’d recommend prioritizing the following:

Check for indexing

Make sure that Google can index the pages you want people to find. You can check the Indexability report in Site Audit to find pages that can’t be indexed and the reasons why. It’s free in Ahrefs Webmaster Tools.

Reclaim lost links

Websites tend to change their URLs over the years. In many cases, these old URLs have links from other websites. If they’re not redirected to the current pages then those links are lost and no longer count for your pages. It’s not too late to do these redirects and you can quickly regain any lost value. Think of this as the fastest link-building you’ll ever do.

To find these pages in Ahrefs, go to Site Explorer -> yourdomain.com -> Pages -> Best by Links and add a “404 not found” HTTP response filter. I usually sort this by “Referring Domains”.

Add internal links

Internal links are links from one page on your site to another page on your site. They help your pages get found and also help them rank better. There’s a tool within Site Audit called “Link opportunities” that helps you quickly locate these opportunities.

Add schema markup

Schema markup is code that helps search engines understand your content better and powers many features that can help your website stand out from the rest in search results. Google has a search gallery that shows the various search features and the schema your site needs to be eligible for them.
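Schema markup is most often added as a JSON-LD script tag. As a rough sketch, the snippet below builds one for an article using Python’s standard `json` module. The function name and the sample values are made up, the property set is a small subset of schema.org’s `Article` type, and the input values are assumed to be trusted text.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Build a JSON-LD script tag describing an article (schema.org Article type)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date string
    }
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )


# Hypothetical article details for illustration.
snippet = article_json_ld("What is technical SEO?", "Jane Doe", "2024-01-10")
print(snippet)
```

The resulting tag goes into the page’s HTML. Check the specific feature’s requirements before relying on it, since each search feature expects its own set of properties.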

Additional technical projects

Page experience signals

These are lesser ranking factors, but still, things you want to look at for the sake of the users. They cover aspects of the website that impact user experience (UX).

Core web vitals

Core Web Vitals are the speed metrics within Google’s Page Experience signals that are used to measure user experience. The metrics measure visual load with Largest Contentful Paint (LCP), visual stability with Cumulative Layout Shift (CLS), and interactivity with First Input Delay (FID), which Google replaced with Interaction to Next Paint (INP) in March 2024.
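Each metric is rated against two thresholds published by Google, which split values into "good", "needs improvement", and "poor". Here is a small sketch of that rating logic. The function name and sample values are made up for this example, and INP is included alongside FID because it replaced FID as a Core Web Vital in March 2024.

```python
# (good_limit, poor_limit) per metric: values up to good_limit are "good",
# values above poor_limit are "poor", anything in between "needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds (replaced FID in March 2024)
}


def rate(metric, value):
    """Return the rating bucket for a measured metric value."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
measurements = {"LCP": 2.1, "CLS": 0.2, "FID": 350}
for metric, value in measurements.items():
    print(metric, value, rate(metric, value))
```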

HTTPS

HTTPS protects the communication between your browser and server from being intercepted and tampered with by attackers. This provides confidentiality, integrity, and authenticity to the vast majority of today’s WWW traffic. You want your pages loaded over HTTPS and not HTTP. Any website that shows a lock icon in the address bar is using HTTPS.

Mobile-friendliness

Simply put, this checks if web pages display properly and are easily used by people on mobile devices. How will you know if your site is mobile-friendly? Check the “Mobile Usability” report in Google Search Console. This report tells you if any of your pages have issues related to mobile-friendliness.

Safe browsing

These are checks to make sure pages aren’t deceptive, don’t include malware, and don’t have any harmful downloads.

Interstitials

Interstitials block the view of content. They are pop-ups that cover the main content, and users may have to interact with them before they go away.

Hreflang – for multiple languages

Hreflang is an HTML attribute used to specify the language and geographical targeting of a webpage. If you have multiple versions of the same page in different languages, you can use the hreflang tag to tell search engines like Google about these variations. This helps them serve the correct version to their users.
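As an illustration, the sketch below generates the hreflang link tags for a page that exists in several languages. The function name and the example.com URLs are assumptions. Each language version is expected to carry the full set of tags, including one that points to itself.

```python
from html import escape


def hreflang_tags(versions, default_url=None):
    """Build <link rel="alternate"> tags from a {language_code: url} mapping."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    ]
    if default_url:
        # x-default marks the fallback page for users who match no listed language.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags


# Hypothetical language versions of one page.
tags = hreflang_tags(
    {"en-gb": "https://example.com/uk/", "de": "https://example.com/de/"},
    default_url="https://example.com/",
)
print("\n".join(tags))
```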

General maintenance / Website health

These tasks won’t have a huge effect on your rankings, but they usually provide a better user experience. Here are a few common technical SEO issues.

Broken links

Broken links are links on your site that point to non-existent resources. They can be either internal (i.e., to other pages on your domain) or external (i.e., to pages on other domains).

You can find broken links on your website quickly with Site Audit in the links report. It is free in Ahrefs Webmaster Tools.

Redirect chains

Redirect chains are a series of redirects that happen between the initial URL and the destination URL.

You can find redirect chains on your website quickly with Site Audit in the Redirects report. It is free in Ahrefs Webmaster Tools.
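To make the idea concrete, here is a minimal sketch that follows a redirect map and flattens chains so that every old URL points straight to its final destination. The `REDIRECTS` mapping and the function names are made up for illustration, and the sketch works on a plain dictionary rather than on live HTTP responses.

```python
# Hypothetical redirect map: source URL -> target URL.
REDIRECTS = {
    "/old": "/older-new",
    "/older-new": "/new",
    "/promo": "/new",
}


def redirect_chain(url, redirects, max_hops=10):
    """Follow redirects from url and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:
            break  # redirect loop: stop after recording the repeated URL
    return chain


def flatten(redirects):
    """Point every source straight at the last URL of its chain.

    Loops are not resolved here and need manual review.
    """
    return {source: redirect_chain(source, redirects)[-1] for source in redirects}


chain = redirect_chain("/old", REDIRECTS)
flat = flatten(REDIRECTS)
print(chain)
print(flat)
```

A chain with more than two entries means at least one extra hop that can be removed by redirecting the first URL directly to the last one.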

Technical SEO tools

These tools help you improve the technical aspects of your website.

Google Search Console

Google Search Console (previously Google Webmaster Tools) is a free service from Google that helps you monitor and troubleshoot your website’s appearance in their search results.

Use it to find and fix technical errors, submit sitemaps, see structured data issues, and more.

Bing and Yandex have their own versions, and so does Ahrefs. Ahrefs Webmaster Tools is a free tool that will help you improve your website’s SEO performance. It allows you to:

- Monitor your website’s SEO health

- Check for 100+ SEO issues

- View all your backlinks

- See all the keywords you rank for

- Find out how much traffic your pages are receiving

- Find internal linking opportunities

It’s Ahrefs’ answer to the limitations of Google Search Console.

Google’s Mobile-friendly test

Google’s Mobile-Friendly test checks how easily a visitor can use your page on a mobile device. It also identifies specific mobile-usability issues like text that’s too small to read, the use of incompatible plugins, and so on.

The mobile-friendly test shows what Google sees when they crawl the page. You can also use the Rich Results Test to see the content Google sees for desktop or mobile devices.

Chrome DevTools

Chrome DevTools is Chrome’s built-in web page debugging tool. Use it to debug page speed issues, improve web page rendering performance, and more. From a technical SEO standpoint, it has endless uses.

Ahrefs Toolbar

Ahrefs SEO Toolbar is a free extension for Chrome and Firefox that provides useful SEO data about the pages and websites you visit.

Its free features are:

- On-page SEO report

- Redirect tracer with HTTP Headers

- Broken link checker

- Link highlighter

- SERP positions

In addition, as an Ahrefs user, you get:

- SEO metrics for every site and page you visit, and for Google search results

- Keyword metrics, such as search volume and keyword difficulty, directly in SERP.

- SERP results export

PageSpeed Insights

PageSpeed Insights analyses the loading speed of your web pages. Alongside the performance score, it also shows actionable recommendations to make pages load faster.

I’m sure by now you have a pretty good idea about technical SEO.

So are you looking for a creative digital marketing agency in Mumbai, Delhi, or anywhere in India that specializes in providing customizable online marketing services? Banyanbrain is one of the best internet marketing companies among them.

We are one of the top SMO service providers, offering optimized digital marketing services designed specifically around your organization’s needs, whether that means appearing more often in organic search, paid advertising, social advertising, or designing an attractive UI. Through our SEO services, we help your website reach the top of the SERPs and bring you the maximum benefit.